The internet is flooded with videos and photos that look real but are anything but. Deepfakes, digitally altered videos that replace faces, change voices, or fabricate entire events, have become a growing concern for social networks.

Platforms like Meta, X, and TikTok are racing to identify what’s genuine before misinformation spreads faster than they can respond.

Let’s take a closer look at how social networks detect and track manipulated media, what technologies power those efforts, and how users can spot fake visuals before sharing them.

The Challenge of Spotting Deepfakes

Deepfakes are built using artificial intelligence models that can learn the details of someone’s face, voice, and movement patterns.

Once trained, those models can generate entirely new content that looks strikingly real. For platforms that host billions of posts per day, catching such manipulations requires more than just manual review.

Some social platforms rely on automated AI detectors that scan uploaded videos for synthetic patterns hinting at deepfake manipulation.

A single deepfake can spread to millions of people in a few hours. That’s why social networks invest heavily in detection systems that can automatically flag suspicious videos and images before they go viral.

Key Detection Methods Used by Social Networks

1. AI-Powered Content Analysis

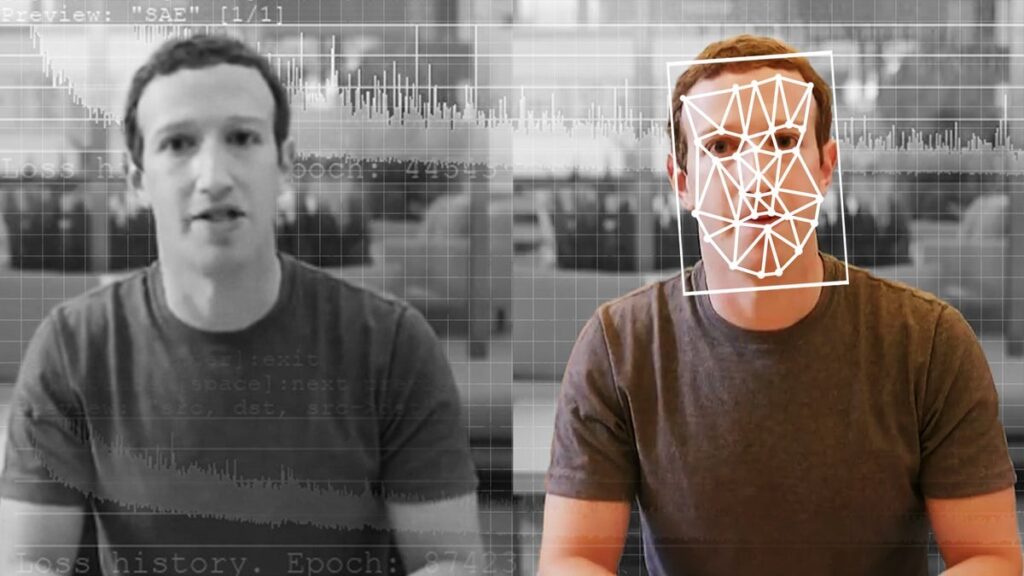

Social networks use machine learning models trained to recognize tiny inconsistencies in visuals. For instance:

- Facial alignment errors: Subtle mismatches in lighting, shadow, or positioning where a swapped face meets the rest of the frame.

- Pixel-level artifacts: Blurring around the edges of a face or object.

- Audio desynchronization: Lips moving slightly out of sync with speech.

Those indicators are rarely visible to the human eye but can be picked up by deep-learning algorithms trained on thousands of real and fake samples.
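
One of the cues above, audio desynchronization, can be illustrated with a toy calculation. The sketch below assumes two per-frame signals have already been extracted: a mouth-opening measurement and an audio loudness envelope. Both signals, the function names, and the threshold are hypothetical. It estimates the frame lag at which the two line up best. Production detectors rely on learned models rather than this simple correlation.

```python
from statistics import mean


def _centered(signal):
    """Subtract the mean so correlation reflects shape, not overall level."""
    m = mean(signal)
    return [x - m for x in signal]


def best_lag(mouth_open, audio_energy, max_lag=5):
    """Return the frame lag at which audio best matches mouth movement.

    A positive lag means the audio trails the lips by that many frames.
    Both inputs are hypothetical per-frame measurements of equal length.
    """
    if len(mouth_open) != len(audio_energy):
        raise ValueError("signals must have the same length")
    a = _centered(mouth_open)
    b = _centered(audio_energy)
    n = len(a)
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Pair mouth frame i with audio frame i + lag where both exist.
        pairs = [(a[i], b[i + lag]) for i in range(n) if 0 <= i + lag < n]
        if not pairs:
            continue
        score = sum(x * y for x, y in pairs) / len(pairs)
        if score > best_score:
            best, best_score = lag, score
    return best


# Toy data: the audio envelope is the mouth signal delayed by 3 frames.
mouth = [0, 0, 1, 3, 1, 0, 0, 2, 4, 2, 0, 0, 1, 2, 1, 0, 0, 0, 0, 0]
audio = [0, 0, 0] + mouth[:-3]
lag = best_lag(mouth, audio)
suspicious = abs(lag) >= 2  # illustrative threshold, not a platform value
```

A clip whose best lag sits well away from zero would be a candidate for closer inspection, not proof of manipulation, since ordinary encoding problems can also shift audio.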

2. Digital Watermarking

Some platforms work with content creators and camera manufacturers to include digital signatures or watermarks in authentic videos. Once a file is uploaded, that embedded data can help confirm whether it has been altered.

The Coalition for Content Provenance and Authenticity (C2PA) publishes an open standard, adopted by major media firms, that uses cryptographic verification to trace how and where a video was modified.
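
The idea behind cryptographic provenance can be sketched in a few lines. The example below is not the C2PA format or API. It swaps in an HMAC with a shared secret where C2PA relies on certificate-based signatures, and the manifest fields and function names are hypothetical. It only illustrates how a signed record bound to a content hash lets a verifier notice that a file changed after signing.

```python
import hashlib
import hmac
import json

# Hypothetical signing key; real provenance systems use certificate-based
# public-key signatures rather than a shared secret.
SIGNING_KEY = b"demo-key-not-for-production"


def make_manifest(content: bytes, creator: str) -> dict:
    """Build a signed record that binds a creator claim to the content hash."""
    claim = {
        "creator": creator,
        "sha256": hashlib.sha256(content).hexdigest(),
    }
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify(content: bytes, manifest: dict) -> bool:
    """Return True only if the manifest is untampered and matches the content."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the claim was edited or signed with another key
    actual = hashlib.sha256(content).hexdigest()
    return hmac.compare_digest(actual, manifest["claim"]["sha256"])


original = b"frame-bytes-of-an-authentic-video"
manifest = make_manifest(original, creator="Example Newsroom")

ok_original = verify(original, manifest)            # content unchanged
ok_edited = verify(original + b"tamper", manifest)  # content altered
```

Here `ok_original` is true and `ok_edited` is false: any change to the bytes after signing breaks the match with the recorded hash, and any change to the claim breaks the signature.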

3. Reverse Hash Matching

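When a known fake is discovered, the system stores a hash value (a digital fingerprint) of that file. If the identical file appears again, an exact hash match catches it instantly. Catching re-encoded or lightly edited copies requires perceptual hashes, which stay close to each other when two files look alike. That’s similar to how platforms detect copyrighted music or known child-safety violations: it’s fast and scalable, though heavily edited copies can still slip through.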
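
The difference between exact and similarity-based matching can be shown with a toy example. The sketch below uses SHA-256 for exact matching and a simple average hash as a stand-in for the perceptual hashes platforms use. The 4x4 grayscale grids, function names, and distance threshold are illustrative assumptions, not any platform's actual system.

```python
import hashlib


def exact_hash(pixels):
    """Cryptographic hash: changes completely if any pixel changes."""
    flat = bytes(v for row in pixels for v in row)
    return hashlib.sha256(flat).hexdigest()


def average_hash(pixels):
    """Toy perceptual hash: one bit per pixel, set if brighter than the mean."""
    flat = [v for row in pixels for v in row]
    avg = sum(flat) / len(flat)
    return [1 if v > avg else 0 for v in flat]


def hamming(a, b):
    """Count the positions where two bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


# A tiny grayscale "frame" from a known fake (values 0-255).
known_fake = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [12, 22, 202, 212],
    [18, 28, 208, 218],
]
# The same frame after slight brightening, as re-encoding might cause.
reupload = [[min(255, v + 3) for v in row] for row in known_fake]
# An unrelated frame with a different layout.
unrelated = [
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
]

exact_match = exact_hash(reupload) == exact_hash(known_fake)
near_distance = hamming(average_hash(reupload), average_hash(known_fake))
far_distance = hamming(average_hash(unrelated), average_hash(known_fake))

MAX_DISTANCE = 2  # illustrative threshold
flagged = near_distance <= MAX_DISTANCE
```

The brightened copy no longer matches the exact hash, yet its perceptual hash is still within the threshold of the known fake, while the unrelated frame is far away. That trade-off is why the threshold matters: set it too loose and unrelated content gets flagged, too tight and edited copies escape.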

Human Review Still Matters

Automation handles most of the heavy lifting, but human reviewers remain essential. Moderators often step in when AI tools flag borderline content, such as satire or edited clips that might not qualify as deepfakes but could still mislead audiences.

Many platforms have also built partnerships with independent fact-checkers. When a manipulated video gains traction, those teams analyze context, confirm authenticity, and apply visible labels like “Altered Media” or “Synthetic Content.” Those tags give users more context before they share or comment.

Transparency and Accountability

Platforms are learning that users don’t just want detection – they want transparency. That means clear labeling, public reports, and partnerships with researchers who can audit how well detection systems work.

Some networks now publish quarterly reports detailing how many manipulated videos were flagged, removed, or labeled. Others host open challenges that invite developers to test their algorithms against new kinds of fakes. The goal isn’t perfection – it’s accountability and ongoing improvement.

How Users Can Protect Themselves

Even the best systems miss things, so user awareness still plays a big role. Here are some practical ways to avoid falling for synthetic media:

- Check the source. If a clip comes from an unfamiliar account, look for it on verified news outlets or official pages.

- Watch for unnatural movements. Deepfakes often struggle with blinking, facial symmetry, and lighting consistency.

- Listen carefully. Synthetic voices sometimes sound too flat or lack natural breathing pauses.

- Use verification tools. Apps and browser plugins that analyze video metadata or compare frames can flag manipulated uploads.

The Road Ahead

Detecting manipulated media will always be a moving target. As AI models evolve, so do the techniques used to expose their fingerprints. Social networks are gradually learning to treat authenticity as a core feature, not just a security layer.

For users, the best defense remains skepticism and awareness. A moment’s pause before hitting share can do more to curb misinformation than any algorithm ever could.